Lin Chen Graduated

The final doctoral examination for Lin Chen will take place on Wednesday, March 11th, at 2:00pm in HLH17, Room 335.

The title of the thesis is: Online Optimization: Convex and Submodular Functions

Advisor: Amin Karbasi

Members of the Committee are:

- Professor Negahban

- Professor Spielman

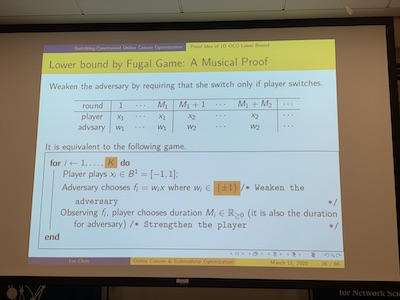

Abstract: In the first part, we study switching-constrained online convex optimization (OCO), where the player has a limited number of opportunities to change her action. While the discrete analog of this online learning task has been studied extensively, previous work in the continuous setting has neither established the minimax rate nor algorithmically achieved it. We here show that T-round switching-constrained OCO with fewer than K switches has a minimax regret of $\Theta(\frac{T}{\sqrt{K}})$. In particular, it is at least $\frac{T}{\sqrt{2K}}$ for one dimension and at least $\frac{T}{\sqrt{K}}$ for higher dimensions. The lower bound in higher dimensions is attained by an orthogonal subspace argument. The minimax analysis in one dimension is more involved. To establish the one-dimensional result, we introduce the fugal game relaxation, whose minimax regret lower bounds that of switching-constrained OCO. We show that the minimax regret of the fugal game is at least $\frac{T}{\sqrt{2K}}$ and thereby establish the minimax lower bound in one dimension. To establish the dimension-independent upper bound, we next show that a mini-batching algorithm provides an $\mathcal{O}(\frac{T}{\sqrt{K}})$ upper bound, and therefore we conclude that the minimax regret of switching-constrained OCO is $\Theta(\frac{T}{\sqrt{K}})$ for any K. This is in sharp contrast to its discrete counterpart, the switching-constrained prediction-from-experts problem, which exhibits a phase transition in minimax regret between the low-switching and high-switching regimes. In the case of bandit feedback, we first determine a novel linear (in T) minimax regret for bandit linear optimization against the strongly adaptive adversary of OCO, implying that a slightly weaker adversary is appropriate. Then by a similar subspace lower bound and mini-batching upper bound, we also establish the minimax regret of switching-constrained bandit convex optimization in dimension n>2 to be $\tilde{\Theta}(\frac{T}{\sqrt{K}})$.
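
As a rough illustration of the mini-batching idea mentioned in the abstract, the sketch below splits the T rounds into at most K blocks, holds the action fixed inside each block, and takes one projected gradient step per block on the averaged gradient. It is a minimal one-dimensional sketch, not the algorithm as analyzed in the thesis: the function name `minibatch_ogd`, the interval `[-radius, radius]`, and the step size `radius / sqrt(b)` are all assumptions made for the example.

```python
import math

def minibatch_ogd(grads, K, radius=1.0, x0=0.0):
    """Hypothetical 1-D mini-batched projected online gradient descent.

    grads:  list of callables; grads[t](x) is the gradient of the round-t loss at x
    K:      switching budget; the action changes fewer than K times
    radius: the feasible set is the interval [-radius, radius] (assumed)
    Returns the list of actions played, one per round.
    """
    T = len(grads)
    block = math.ceil(T / K)      # block length; yields at most K blocks
    x = x0
    g_sum = 0.0                   # gradient sum accumulated over the current block
    actions = []
    for t in range(T):
        if t > 0 and t % block == 0:
            b = t // block                    # number of completed blocks
            eta = radius / math.sqrt(b)       # assumed 1/sqrt(b) step-size schedule
            x = x - eta * g_sum / block       # one step on the averaged block gradient
            x = max(-radius, min(radius, x))  # project back onto the interval
            g_sum = 0.0
        actions.append(x)
        g_sum += grads[t](x)
    return actions
```

Because the action can only change at a block boundary and there are at most K blocks, the player switches at most K-1 times. Heuristically, a gradient method run over K block-level rounds incurs regret on the order of $\sqrt{K}$ per unit of loss scale, and each block aggregates about T/K rounds, which matches the $\mathcal{O}(\frac{T}{\sqrt{K}})$ rate quoted above.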

In the second part, we consider online continuous submodular maximization. We first propose a variant of the Frank-Wolfe algorithm that has access to the full gradient of the objective functions. We show that it achieves a regret bound of $\mathcal{O}(\sqrt{T})$ (where T is the horizon of the online optimization problem) against a (1-1/e)-approximation to the best feasible solution in hindsight.
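
To make the Frank-Wolfe idea concrete, here is a small offline sketch, not the online algorithm proposed in the thesis. It maximizes a toy monotone DR-submodular function, $F(x) = 1 - \prod_i (1 - x_i)$, over the set $\{x \in [0,1]^n : \sum_i x_i \le \text{budget}\}$ by starting at the origin and repeatedly moving a step of size 1/steps toward the best feasible direction for the current gradient. The objective, the constraint set, and all function names are assumptions chosen for illustration.

```python
def F(x):
    """Toy objective (assumed): F(x) = 1 - prod_i (1 - x_i) on [0, 1]^n."""
    p = 1.0
    for xi in x:
        p *= 1.0 - xi
    return 1.0 - p

def grad_F(x):
    """Gradient of F: dF/dx_i = prod_{j != i} (1 - x_j)."""
    g = []
    for i in range(len(x)):
        p = 1.0
        for j, xj in enumerate(x):
            if j != i:
                p *= 1.0 - xj
        g.append(p)
    return g

def lmo(g, budget):
    """Linear maximization over {v in [0,1]^n : sum(v) <= budget}, integer budget.

    Puts weight 1 on the `budget` coordinates with the largest positive gradient.
    """
    order = sorted(range(len(g)), key=lambda i: g[i], reverse=True)
    v = [0.0] * len(g)
    for i in order[:budget]:
        if g[i] > 0:
            v[i] = 1.0
    return v

def frank_wolfe_dr(n, budget, steps):
    """Frank-Wolfe variant for monotone DR-submodular maximization (offline sketch)."""
    x = [0.0] * n                 # start at the origin
    for _ in range(steps):
        v = lmo(grad_F(x), budget)
        # Add v / steps rather than taking a convex combination, so the final
        # point is an average of `steps` feasible directions.
        x = [xi + vi / steps for xi, vi in zip(x, v)]
    return x
```

In the online setting described above, the objective changes every round, so the exact linear maximization step would have to be replaced by online learners. That part is not shown here.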

In the third part, we improve our results in the second part and propose three online algorithms for submodular maximization. The first one, Mono-Frank-Wolfe, reduces the number of per-function gradient evaluations from $\sqrt{T}$ and $T^{3/2}$ to 1, and achieves a (1-1/e)-regret bound of $\mathcal{O}(T^{4/5})$. The second one, Bandit-Frank-Wolfe, is the first bandit algorithm for continuous DR-submodular maximization, which achieves a (1-1/e)-regret bound of $\mathcal{O}(T^{8/9})$. Finally, we extend Bandit-Frank-Wolfe to a bandit algorithm for discrete submodular maximization, Responsive-Frank-Wolfe, which attains a (1-1/e)-regret bound of $\mathcal{O}(T^{8/9})$ in the responsive bandit setting.