Submodular Optimization

The difficulty of searching through a massive amount of data in order to quickly make an informed decision is one of today’s most ubiquitous challenges. Many scientific and engineering models feature inherently discrete decision variables—from phrases in a corpus to objects in an image. Similarly, nearly all aspects of the machine learning pipeline involve discrete tasks, from data summarization and sketching to feature selection and model explanation. The study of how to make near-optimal decisions from a massive pool of possibilities is at the heart of combinatorial optimization.

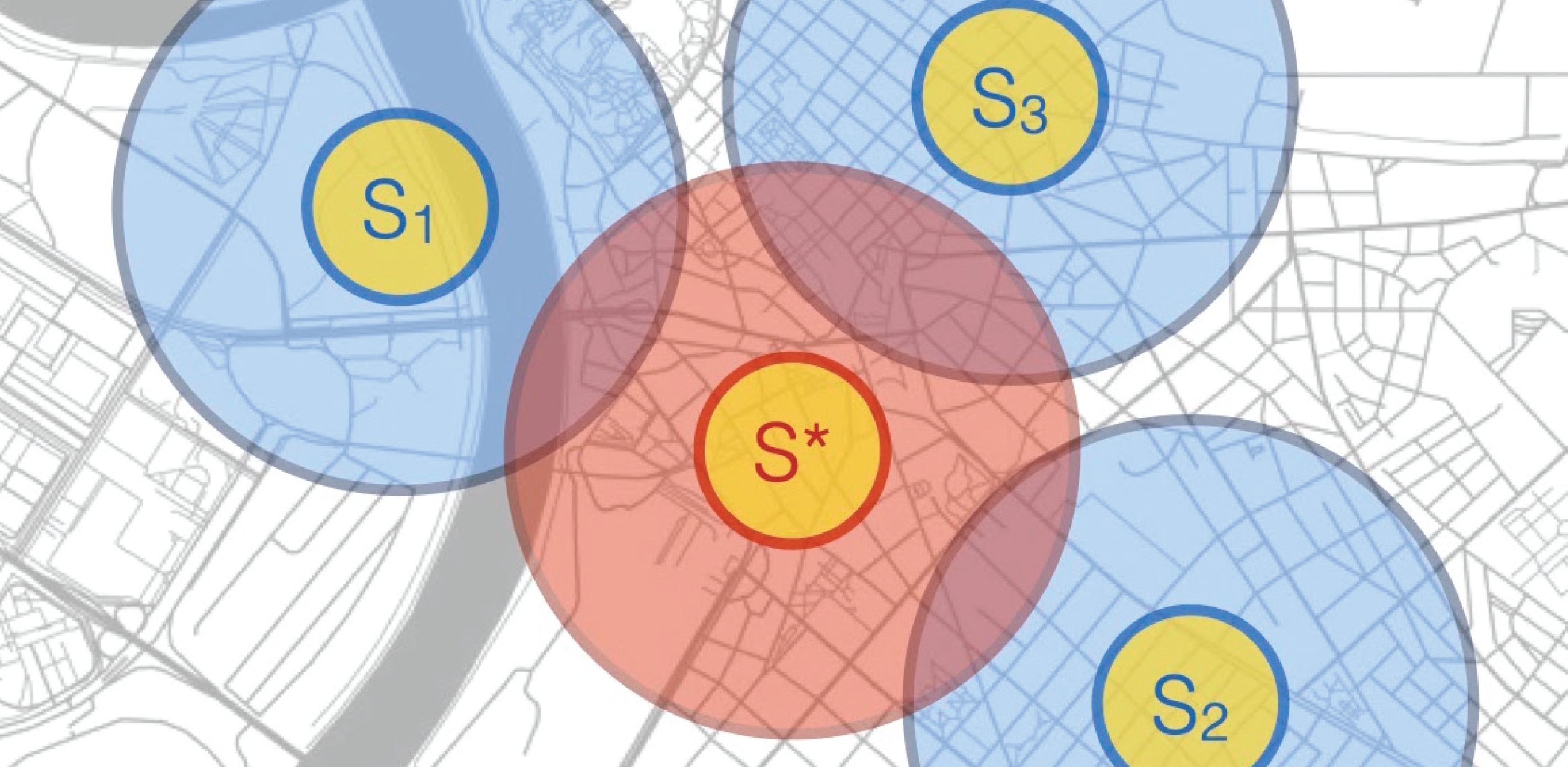

As computational methods pervade all aspects of science and engineering, it is of great importance to understand which discrete formulations can be solved efficiently and how to do so. Many of these problems are notoriously hard, and even those that are theoretically tractable may not scale to large instances. However, the problems of practical interest are often much more well-behaved and possess extra structures that allow them to be amenable to exact or approximate optimization techniques. Just as convexity has been a celebrated and well-studied condition under which continuous optimization is tractable, submodularity is a condition for which discrete objectives may be optimized. In a nutshell, submodularity captures the intuitive diminishing returns notion where the added value of including an element (e.g., image, sensor, etc ) to a context (dataset of images, sensor network, etc) decreases as the context in which it is considered increases.

-

JMLR 2023 How Do You Want Your Greedy: Simultaneous or Repeated? Moran Feldman, Christopher Harshaw, Amin Karbasi -

NeurIPS 2022 Submodular Maximization in Clean Linear Time Wenxin Li, Moran Feldman, Ehsan Kazemi, Amin Karbasi -

COLT 2021 Adaptivity in Adaptive Submodularity Hossein Esfandiari, Amin Karbasi, Vahab S. Mirrokni -

ICML 2021 Regularized Submodular Maximization at Scale Ehsan Kazemi, Shervin Minaee, Moran Feldman, Amin Karbasi -

journal of Mathematics of Operations Research 2021 The Power of Subsampling in Submodular Maximization Christopher Harshaw, Ehsan Kazemi, Moran Feldman, Amin Karbasi -

NeurIPS 2021 An Exponential Improvement on the Memorization Capacity of Deep Threshold Networks Shashank Rajput, Kartik Sreenivasan, Dimitris Papailiopoulos, Amin Karbasi -

NeurIPS 2021 Multiple Descent: Design Your Own Generalization Curve Lin Chen, Yifei Min, Mikhail Belkin, Amin Karbasi -

NeurIPS 2021 Parallelizing Thompson Sampling Amin Karbasi, Vahab Mirrokni, Mohammad Shadravan -

NeurIPS 2021 Submodular + Concave Siddharth Mitra, Moran Feldman, Amin Karbasi -

AISTATS 2020 Black Box Submodular Maximization: Discrete and Continuous Settings Lin Chen, Mingrui Zhang, Hamed Hassani, Amin Karbasi: -

ICML 2020 Streaming Submodular Maximization under a k-Set System Constraint. Ran Haba, Ehsan Kazemi, Moran Feldman, Amin Karbasi: -

IEEE Signal Processing Magazine 2020 Submodularity in Action: From Machine Learning to Signal Processing Applications Ehsan Tohidi, Rouhollah Amiri, Mario Coutino, David Gesbert, Geert Leus, Amin Karbasi -

Journal of Machine Learning Research 2020 Stochastic Conditional Gradient Methods: From Convex Minimization to Submodular Maximization Aryan Mokhtari, Hamed Hassani, Amin Karbasi -

NeurIPS 2020 Continuous Submodular Maximization: Beyond DR-Submodularity Moran Feldman, Amin Karbasi -

NeurIPS 2020 Submodular Maximization Through Barrier Functions | Spotlight Ashwinkumar Badanidiyuru, Amin Karbasi, Ehsan Kazemi, Jan Vondrák -

PhD Thesis 2020 Online Optimization: Convex and Submodular Functions Lin Chen and Amin Karbasi -

PhD Thesis 2020 Modern Challenges for Machine Learning Applications of Submodularity: Privacy, Scalability, and Sequences Marko Mitrovic and Amin Karbasi -

ICML 2019 Submodular Maximization beyond Non-negativity: Guarantees, Fast Algorithms, and Applications | Full Oral Presentation Chris Harshaw, Moran Feldman, Justin Ward, Amin Karbasi: -

ICML 2019 Submodular Streaming in All Its Glory: Tight Approximation, Minimum Memory and Low Adaptive Complexity. Ehsan Kazemi, Marko Mitrovic, Morteza Zadimoghaddam, Silvio Lattanzi, Amin Karbasi -

NeurIPS 2019 Adaptive Sequence Submodularity Marko Mitrovic, Ehsan Kazemi, Moran Feldman, Andreas Krause, Amin Karbasi -

NeurIPS 2019 Stochastic Continuous Greedy ++: When Upper and Lower Bounds Match Amin Karbasi, Hamed Hassani, Aryan Mokhtari, Zebang Shen -

STOC 2019 Unconstrained submodular maximization with constant adaptive complexity Lin Chen, Moran Feldman, Amin Karbasi -

AISTATS 2018 Conditional Gradient Method for Stochastic Submodular Maximization: Closing the Gap Aryan Mokhtari, Hamed Hassani, Amin Karbasi -

AISTATS 2018 Online Continuous Submodular Maximization | Oral Presentation Lin Chen, Hamed Hassani, Amin Karbasi -

AISTATS 2018 Submodularity on Hypergraphs: From Sets to Sequences Marko Mitrovic, Ehsan Kazemi, Morteza Zadimoghaddam, Amin Karbasi -

ICML 2018 Data Summarization at Scale: A Two-Stage Submodular Approach M . Mitrovic, E. Kazemi, M. Zadimoghaddam, A. Karbasi -

ICML 2018 Decentralized Submodular Maximization: Bridging Discrete and Continuous Settings. Aryan Mokhtari, Hamed Hassani, Amin Karbasi: -

ICML 2018 Projection-Free Online Optimization with Stochastic Gradient: From Convexity to Submodularity. Lin Chen, Christopher Harshaw, Hamed Hassani, Amin Karbasi -

ICML 2018 Scalable Deletion-Robust Submodular Maximization: Data Summarization with Privacy and Fairness Constraints. Ehsan Kazemi, Morteza Zadimoghaddam, Amin Karbasi -

ICML 2018 Weakly Submodular Maximization Beyond Cardinality Constraints: Does Randomization Help Greedy? Lin Chen, Moran Feldman, Amin Karbasi -

NeurIPS 2018 Do Less, Get More: Streaming Submodular Maximization with Subsampling | Spotlight presentation Moran Feldman, Amin Karbasi, Ehsan Kazemi -

KDD 2014 Streaming submodular maximization: massive data summarization on the fly. Ashwinkumar Badanidiyuru, Baharan Mirzasoleiman, Amin Karbasi, Andreas Krause -

NeurIPS 2013 Distributed Submodular Maximization: Identifying Representative Elements in Massive Data. Baharan Mirzasoleiman, Amin Karbasi, Rik Sarkar, Andreas Krause