| Title | Date | Host | Records | Info | |

|---|---|---|---|---|---|

| Tutorial on Submodular Optimization: From Discrete to Continuous and Back | July, 2020 | ICML 2020 | part 1 part 2 part 3 part 4 | ||

|

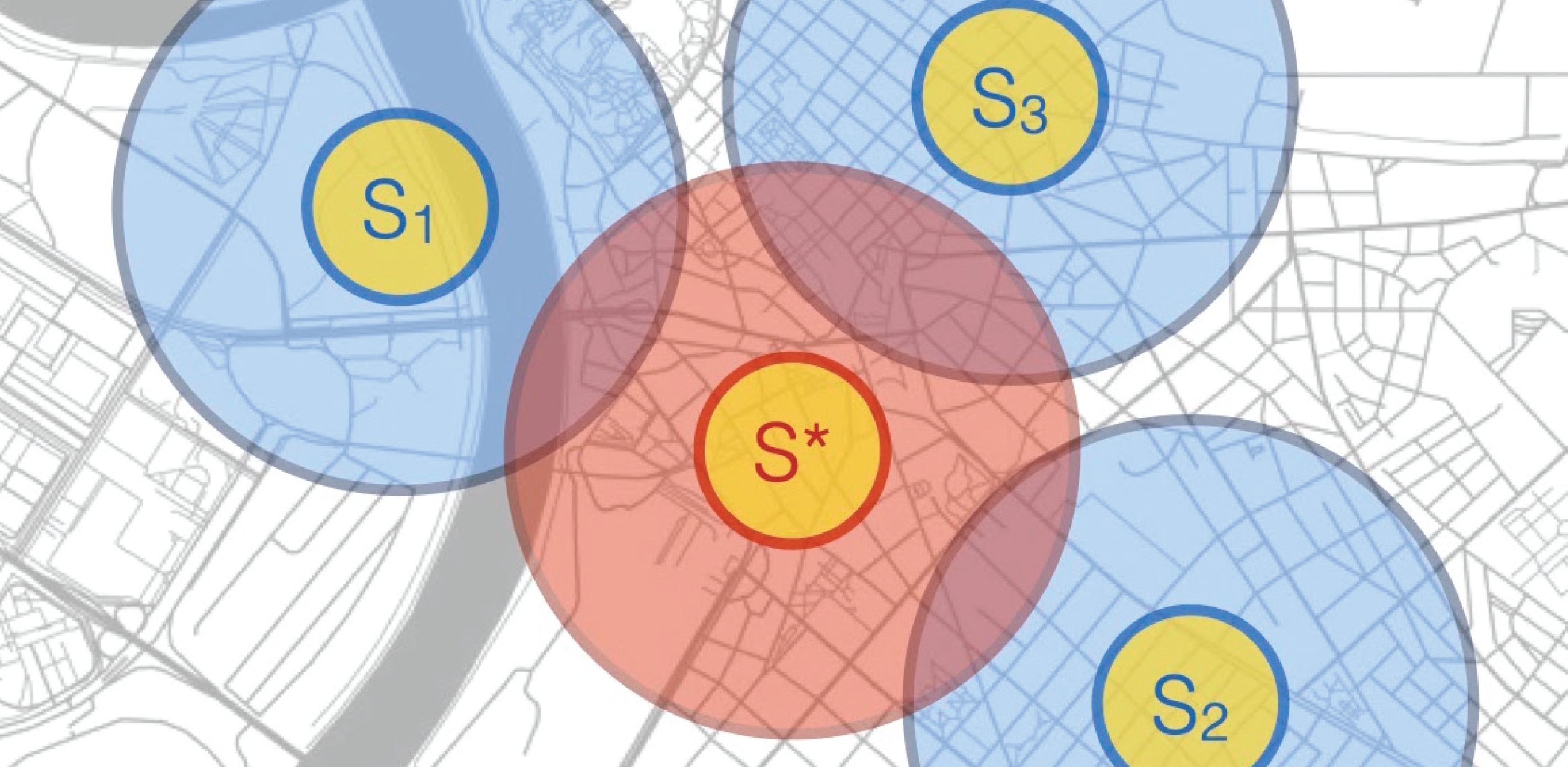

This tutorial will cover recent advancements in discrete optimization methods for large-scale machine learning. Traditionally, machine learning has harnessed convex optimization to design fast algorithms with provable guarantees for a broad range of applications. In recent years, however, there has been a surge of interest in applications that involve discrete optimization. For discrete domains, the analog of convexity is considered to be submodularity, and the evolving theory of submodular optimization has been a catalyst for progress in extraordinarily varied application areas including active learning and experimental design, vision, sparse reconstruction, graph inference, video analysis, clustering, document summarization, object detection, information retrieval, network inference, interpreting neural networks, and discrete adversarial attacks.

As applications and techniques of submodular optimization mature, a fundamental gap between theory and application emerges. In the past decade, paradigms such as large-scale learning, distributed systems, and sequential decision making have enabled a quantum leap in the performance of learning methodologies. Incorporating these paradigms in discrete problems has led to fundamentally new frameworks for submodular optimization. The goal of this tutorial is to cover rigorous and scalable foundations for discrete optimization in complex, dynamic environments, addressing challenges of scalability and uncertainty, and facilitating distributed and sequential learning in broader discrete settings. More specifically, we will cover advancements in four areas:

Submodular Optimization with Perfect Information

Submodular Optimization with Imperfect Information

A Non-Convex Bridge Between Discrete and Continuous Optimization

Beyond Submodularity

|

|||||

| Generative Models AAA: Acceleration, Application, Adversary | Dec, 2024 | Vector Institute 2024 | video | ||

|

Professor Amin Karbasi explores three major challenges in generative AI: computational efficiency, neuroscience applications, and security vulnerabilities. He presents innovative solutions, including linear-time alternatives to quadratic self-attention in transformers, introduces BrainLM, a foundation model for understanding neural activity, and uncovers automated attack risks that threaten AI security. As an Associate Professor at Yale University with joint appointments in Electrical Engineering, Computer Science, and Statistics & Data Science, and a Staff Scientist at Google NY, his research focuses on developing scalable, robust AI systems. His keynote highlights critical advancements in efficient AI deployment and security, shaping the future of trustworthy AI.

|

|||||

| AI Security Interview Series | March, 2024 | Robust Intelligence | video | ||

|

In this talk, Amin Karbasi, Associate Professor at Yale University, and Yaron Singer, CEO of Robust Intelligence, discuss their research on AI security and their Tree of Attacks method. As co-authors of this approach, they explore how their automated system can jailbreak black-box LLMs, particularly those that are inaccessible today. They dive into the challenges of AI security, adversarial attacks, and the implications of automated jailbreaking, providing key insights into the evolving risks and defenses in the world of large language models.

|

|||||

| Learning Under Data Poisoning | Feb, 2024 | Simons Institute | video | ||

|

|

|||||

| Replicability in Interactive Learning | June, 2024 | Hariri Institute for Computing, Boston University | video | ||

|

Amin Karbasi is an Associate Professor of Electrical Engineering, Computer Science, and Statistics & Data Science at Yale University, as well as a Staff Scientist at Google NY. His contributions to machine learning, AI, and data science have earned him numerous prestigious awards, including the NSF Career Award, ONR Young Investigator Award, AFOSR Young Investigator Award, and the DARPA Young Faculty Award. He has also been recognized by leading institutions such as Amazon, Nokia Bell Labs, Google, Microsoft, and the National Academy of Engineering. His research excellence is further highlighted by multiple best paper awards from top conferences like AISTATS, MICCAI, ACM SIGMETRICS, ICASSP, and IEEE ISIT. Karbasi's Ph.D. thesis was awarded the Patrick Denantes Memorial Prize from EPFL, Switzerland, cementing his impact in the field. In this talk, he will explore replicability in interactive learning, addressing its challenges and significance in AI and data-driven decision-making. |

|||||

| Virtual Foundations of Data Science Series | Feb, 2021 | EnCORE | video | ||

|

Submodular functions model the intuitive notion of diminishing returns. Due to their far-reaching applications, they have been rediscovered in many fields such as information theory, operations research, statistical physics, economics, and machine learning. They also enjoy computational tractability as they can be minimized exactly or maximized approximately. The goal of this talk is simple. We see how a little bit of randomness, a little bit of greediness, and the right combination can lead to pretty good methods for offline, streaming, and distributed solutions. I do not assume any background on submodularity and try to explain all the required details during the talk. |
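To make the "little bit of greediness" concrete, here is a minimal sketch of the classic greedy heuristic for maximizing a monotone submodular function under a cardinality constraint. It is not taken from the talk: the set-coverage objective, the function names `coverage` and `greedy_max`, and the toy data are all illustrative assumptions. For monotone submodular objectives this plain greedy rule is known to achieve a (1 - 1/e) approximation; the randomized, streaming, and distributed variants mentioned in the abstract are not shown.

```python
from typing import Callable, Dict, Hashable, List, Sequence, Set


def coverage(selected: Sequence[Hashable], sets: Dict[Hashable, Set[int]]) -> int:
    """Toy submodular objective (assumed for illustration): number of items covered."""
    covered: Set[int] = set()
    for key in selected:
        covered |= sets[key]
    return len(covered)


def greedy_max(
    ground: Sequence[Hashable],
    f: Callable[[List[Hashable]], float],
    k: int,
) -> List[Hashable]:
    """Pick up to k elements, each time adding the one with the largest marginal gain."""
    chosen: List[Hashable] = []
    for _ in range(k):
        base = f(chosen)
        best, best_gain = None, 0.0
        for element in ground:
            if element in chosen:
                continue
            gain = f(chosen + [element]) - base  # marginal gain of adding element
            if gain > best_gain:
                best, best_gain = element, gain
        if best is None:  # no element adds value, so stop early
            break
        chosen.append(best)
    return chosen


# Hypothetical data: each key covers a subset of the items 1..7.
sets = {"a": {1, 2, 3}, "b": {3, 4}, "c": {4, 5, 6, 7}, "d": {1, 7}}
picked = greedy_max(list(sets), lambda s: coverage(s, sets), k=2)
print(picked, coverage(picked, sets))
```

Diminishing returns show up directly in the run: "b" would add two items to the empty set but only one once "c" has been chosen.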

|||||

| Sequential Decision Making: How Much Adaptivity Is Needed Anyways? | Feb, 2022 | Communications and Signal Processing Seminar Series | video | ||

|

Adaptive stochastic optimization under partial observability is one of the fundamental challenges in artificial intelligence and machine learning with a wide range of applications, including active learning, optimal experimental design, interactive recommendations, viral marketing, Wikipedia link prediction, and perception in robotics, to name a few. In such problems, one needs to adaptively make a sequence of decisions while taking into account the stochastic observations collected in previous rounds. For instance, in active learning, the goal is to learn a classifier by carefully requesting as few labels as possible from a set of unlabeled data points. Similarly, in experimental design, a practitioner may conduct a series of tests in order to reach a conclusion. Even though it is possible to determine all the selections ahead of time before any observations take place (e.g., select all the data points at once or conduct all the medical tests simultaneously), so-called a priori selection, it is more efficient to consider a fully adaptive procedure that exploits the information obtained from past selections in order to make a new selection. In this talk, we introduce semi-adaptive policies, for a wide range of decision-making problems, that enjoy the power of fully sequential procedures while performing exponentially fewer adaptive rounds. |

|||||

| Submodularity in Information and Data Science | Feb, 2019 | IEEE Information Theory Society | video | ||

|

Submodularity is a structural property of functions that has received significant attention in the mathematics community, owing to its natural and wide-ranging applicability. In particular, numerous challenging problems in information theory, machine learning, and artificial intelligence rely on optimization techniques for which submodularity is key to solving them efficiently. We will start by defining submodularity and polymatroidality, and we will survey a surprisingly diverse set of functions that are submodular and operations that preserve submodularity. Next we look at submodular functions that have been classically used as information measures and their novel applications in ML and AI, including active/semi-supervised learning, structured sparsity, data summarization, and combinatorial independence and generalized entropy. Subsequently, we'll define the submodular polytope, and its relationship to the various greedy algorithms and its exact and efficient solution to certain linear programs. We will see how submodularity shares certain properties with convexity (efficient minimization, discrete separation, subdifferentials, lattices and sub-lattices, and the convexity of the Lovász extension), concavity (via its definition, submodularity via concave functions, superdifferentials), and neither (simultaneous sub- and super-differentials, efficient approximate maximization). Finally, we will discuss some extensions of submodularity that have proven useful in recent years. |
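For reference, the standard textbook definition the abstract alludes to can be written as follows. The notation (ground set $V$, set function $f$) is assumed here and is not taken from the talk itself. A function $f : 2^V \to \mathbb{R}$ is submodular if it exhibits diminishing returns:

$$
f(A \cup \{e\}) - f(A) \;\ge\; f(B \cup \{e\}) - f(B)
\qquad \text{for all } A \subseteq B \subseteq V,\ e \in V \setminus B,
$$

or, equivalently,

$$
f(A) + f(B) \;\ge\; f(A \cup B) + f(A \cap B)
\qquad \text{for all } A, B \subseteq V.
$$

Under the usual convention, a polymatroid function is one that is additionally normalized, $f(\emptyset) = 0$, and monotone, $f(A) \le f(B)$ whenever $A \subseteq B$.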

|||||

| How to (or not to) Run a Vaccination Trial | Feb, 2023 | ICON Purdue | video | ||

|

|

|||||